In the evolving landscape of immersive technologies, the term “interpretation simulation” has emerged as a central concept. It refers to the creation of dynamic, user‑driven narratives and experiences that adjust in real time to contextual cues, sensor data, and individual preferences. While virtual reality (VR), augmented reality (AR), and the broader metaverse provide the playgrounds for such simulations, each medium offers unique affordances that shape how interpretation unfolds. This article explores how interpretation simulation is being realized across these platforms, the underlying technologies, and the opportunities and challenges that lie ahead.

What Is Interpretation Simulation?

At its core, interpretation simulation blends three pillars: data input, algorithmic modeling, and immersive output. Data can come from environmental sensors, biometric trackers, or user interactions. Algorithms—often rooted in machine learning and procedural generation—process this information to generate meaningful content or alter existing elements. The immersive output then presents the resulting narrative or environment to the user. The simulation is “interpretation” because it transforms raw data into a coherent story or context, and it is “simulation” because it is a real‑time, interactive representation rather than a static artifact.
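The three pillars can be sketched as one small loop: readings come in, a model turns them into context, and the context selects an output. This is a minimal illustration only; the `Reading` type, the sensor name, the plain average standing in for a learned model, and the 100 bpm threshold are all hypothetical and not taken from any particular engine or framework.

```python
from dataclasses import dataclass

@dataclass
class Reading:
    """Pillar 1 (data input): one sample from a hypothetical sensor."""
    source: str   # e.g. "heart_rate"
    value: float

def interpret(readings: list) -> dict:
    """Pillar 2 (modeling): reduce raw readings to a per-source context.

    A plain mean stands in for the learned model a real system would use."""
    grouped = {}
    for r in readings:
        grouped.setdefault(r.source, []).append(r.value)
    return {src: sum(vals) / len(vals) for src, vals in grouped.items()}

def render(context: dict) -> str:
    """Pillar 3 (output): choose what the user experiences from the context."""
    # The 100 bpm cut-off is an illustrative assumption, not a clinical value.
    return "tense" if context.get("heart_rate", 0.0) > 100.0 else "calm"

def step(readings: list) -> str:
    """One tick of the loop: data in, interpretation, immersive output."""
    return render(interpret(readings))

print(step([Reading("heart_rate", 95.0), Reading("heart_rate", 115.0)]))  # prints "tense"
```

Keeping the three stages as separate functions makes it easy to swap the averaging step for a real model without touching input handling or rendering.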

Interpretation simulation is not a new idea; early virtual worlds employed simple rule‑based systems to adapt to player actions. However, the current generation of AI, high‑fidelity rendering, and cloud computing allows for far richer, more nuanced simulations that can interpret complex human behavior and environmental dynamics.

Virtual Reality: The Immersive Sandbox

Virtual reality offers the most complete sensory immersion, allowing users to inhabit fully constructed worlds. In VR, interpretation simulation can be used to create adaptive storylines that respond to a player’s gaze, hand gestures, and even heart rate. For example, a horror game might intensify ambient noise and visual distortions when the system detects an elevated heart rate, thereby creating a more authentic sense of dread.
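The horror-game example amounts to mapping a noisy biometric signal onto effect intensities. The sketch below assumes a heart-rate stream in beats per minute; the smoothing factor, the 70 to 130 bpm range, and the effect names are illustrative assumptions rather than values from any shipping game.

```python
# Minimal sketch: biometric-driven tension for a horror scene.
# All thresholds and effect names are illustrative assumptions.

def smooth(prev_bpm: float, sample_bpm: float, alpha: float = 0.2) -> float:
    """Exponential moving average that damps sensor jitter and one-off spikes."""
    return prev_bpm + alpha * (sample_bpm - prev_bpm)

def dread_level(bpm: float, resting: float = 70.0, peak: float = 130.0) -> float:
    """Map heart rate linearly onto [0, 1], clamped outside [resting, peak]."""
    t = (bpm - resting) / (peak - resting)
    return max(0.0, min(1.0, t))

def update_effects(prev_bpm: float, sample_bpm: float):
    """Return the new smoothed heart rate and the effect parameters it implies."""
    bpm = smooth(prev_bpm, sample_bpm)
    level = dread_level(bpm)
    effects = {
        "ambient_volume": 0.3 + 0.7 * level,  # never fully silent, louder under stress
        "distortion": level,                  # 0 = clean image, 1 = maximum distortion
    }
    return bpm, effects

bpm = 70.0
for sample in [72, 95, 118, 124]:  # hypothetical heart-rate samples
    bpm, fx = update_effects(bpm, sample)
    print(f"{bpm:5.1f} bpm -> distortion {fx['distortion']:.2f}")
```

Smoothing before mapping matters here: without it, a single noisy reading could make the scene flicker between calm and frightening.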

- Procedural Environments: Algorithms generate terrain, architecture, and flora on the fly, ensuring that each playthrough offers fresh experiences.

- Emotion Recognition: Facial‑tracking and biometric data feed into models that adjust dialogue, pacing, and difficulty to match the player’s emotional state.

- Collaborative Interpretation: In multi‑user VR spaces, simulations can interpret group dynamics, allowing NPCs or narrative arcs to evolve based on collective decisions.
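For procedural environments, one classic technique is midpoint displacement, used here for brevity; the article does not name a specific algorithm, and engines often rely on noise functions such as Perlin or simplex noise instead. This sketch generates a 1D terrain profile; the seed, level count, and roughness value are illustrative assumptions.

```python
import random
from typing import Optional

def midpoint_terrain(levels: int, roughness: float = 0.5,
                     seed: Optional[int] = None) -> list:
    """1D midpoint-displacement heightmap with 2**levels + 1 samples.

    The same seed always reproduces the same terrain; a new seed gives
    a fresh layout for the next playthrough."""
    rng = random.Random(seed)
    size = 2 ** levels + 1
    heights = [0.0] * size
    heights[0], heights[-1] = rng.uniform(0.0, 1.0), rng.uniform(0.0, 1.0)
    step, spread = size - 1, 1.0
    while step > 1:
        half = step // 2
        # Fill each midpoint from its two already-known neighbours plus noise.
        for i in range(half, size - 1, step):
            mid = (heights[i - half] + heights[i + half]) / 2.0
            heights[i] = mid + rng.uniform(-spread, spread)
        # Smaller offsets at finer scales keep the profile coherent.
        step, spread = half, spread * roughness
    return heights

ridge = midpoint_terrain(levels=4, seed=42)  # 17 height samples
```

Seeding a private `random.Random` instance, rather than the global generator, keeps a playthrough reproducible (useful for bug reports or shared sessions) while still allowing fresh worlds on demand.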

These techniques turn VR from a static showcase into a living, breathing narrative engine where the user is both audience and author.

Augmented Reality: Layering Meaning on the Real World

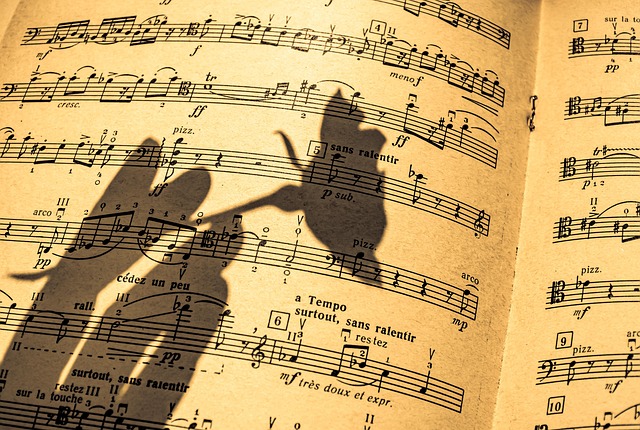

Augmented reality superimposes digital information onto the physical environment. Interpretation simulation in AR focuses on contextual relevance—presenting data that makes sense in the immediate surroundings. A museum visitor might receive an AR overlay that explains the provenance of a painting, while also responding to the visitor’s movement through the exhibit, adjusting lighting cues to guide attention.
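At its simplest, the museum scenario reduces to choosing which overlay is relevant given where the visitor is standing. The sketch assumes the AR framework already reports the visitor's position on the floor plane in metres; the exhibit names, coordinates, and 2.5 m radius are hypothetical.

```python
import math

# Hypothetical exhibit anchors (x, y) in metres on the gallery floor plane.
EXHIBITS = {
    "portrait_a": (0.0, 0.0),
    "landscape_b": (5.0, 0.0),
    "sculpture_c": (5.0, 6.0),
}

def active_overlay(visitor_xy, exhibits=EXHIBITS, radius=2.5):
    """Return the nearest exhibit within `radius` metres of the visitor, or None."""
    best, best_dist = None, radius
    for name, anchor in exhibits.items():
        d = math.dist(visitor_xy, anchor)
        if d <= best_dist:
            best, best_dist = name, d
    return best

# As the visitor walks, the overlay follows them from one work to the next.
for pos in [(0.5, 0.5), (2.5, 3.0), (4.0, 0.2)]:
    print(pos, active_overlay(pos))
```

Returning `None` between exhibits lets the interface fall back to wayfinding cues instead of showing an irrelevant description.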

Key Mechanisms in AR Interpretation

- Spatial Mapping: LiDAR and depth cameras capture a 3D map of the environment so that virtual objects stay accurately anchored to real surfaces.

- Semantic Segmentation: Image recognition classifies surfaces and objects, enabling context‑aware overlays (e.g., a product label appearing only when the user looks at a specific shelf).

- Personalization Loops: User preferences stored in the cloud inform the content that surfaces, creating a tailored informational experience.
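The shelf example combines two of these mechanisms: semantic segmentation decides *what* an object is, and gaze decides whether the user is looking at it. The sketch below assumes segmentation has already produced a class label per object and that an eye tracker supplies a gaze direction vector; the `"shelf"` label and the 10° cone are hypothetical choices.

```python
import math

def angle_deg(u, v):
    """Angle between two 3D vectors, in degrees."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.hypot(*u) * math.hypot(*v)
    # Clamp to guard acos against tiny floating-point overshoot.
    return math.degrees(math.acos(max(-1.0, min(1.0, dot / norm))))

def should_show_label(gaze_dir, head_pos, obj_pos, obj_class,
                      wanted_class="shelf", cone_deg=10.0):
    """Show the overlay only when segmentation tagged the object with the
    wanted class AND the object lies inside a narrow cone around the gaze."""
    if obj_class != wanted_class:
        return False
    to_obj = [o - h for o, h in zip(obj_pos, head_pos)]
    if math.hypot(*to_obj) == 0.0:  # object at the head position: no direction
        return False
    return angle_deg(gaze_dir, to_obj) <= cone_deg
```

Checking the cheap class label first means the geometric test only runs for objects that could ever carry the overlay.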

By merging physical and digital layers, AR turns everyday scenes into data‑rich storytelling canvases, where interpretation simulation bridges the gap between context and content.

The Metaverse: Persistent Interpretation at Scale

The metaverse envisions a persistent, interconnected digital universe where users can move freely between virtual spaces. Interpretation simulation in this realm must handle continuity across sessions, diverse user profiles, and real‑time data streams from multiple devices.

- Cross‑Platform Data Fusion: Aggregating data from VR headsets, AR glasses, and mobile devices to build a unified user profile.

- Dynamic World States: Procedural generation that adapts to global events—such as a simulated storm that affects all users in a shared city.

- Social Interpretation: Analyzing interaction patterns to adjust community norms, virtual economies, and narrative arcs.
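Cross‑platform data fusion needs some rule for resolving conflicting updates from different devices. The sketch uses last-writer-wins per field, one simple policy chosen here for illustration; production systems may prefer vector clocks or CRDTs. The device names, fields, and timestamps are hypothetical.

```python
def fuse_profiles(updates):
    """Merge per-device profile updates field by field, newest value wins.

    Each update is (device, timestamp, {field: value}). Timestamps are assumed
    to come from one trusted clock, which real deployments cannot take for granted.
    """
    newest = {}  # field -> (timestamp, value)
    for _device, ts, fields in updates:
        for key, value in fields.items():
            # Strictly newer wins; on a tie the first update seen is kept.
            if key not in newest or ts > newest[key][0]:
                newest[key] = (ts, value)
    return {key: value for key, (_, value) in newest.items()}

profile = fuse_profiles([
    ("vr_headset", 10.0, {"locale": "en", "comfort_mode": "seated"}),
    ("ar_glasses", 11.0, {"locale": "fr"}),
    ("phone", 12.0, {"comfort_mode": "standing"}),
])
# profile == {"locale": "fr", "comfort_mode": "standing"}
```

Merging per field rather than per profile means an update from one device does not wipe out unrelated preferences set on another.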

In the metaverse, interpretation simulation becomes a continuous layer of meaning, ensuring that every encounter feels personal yet interconnected within a larger ecosystem.

Foundational Technologies Behind Interpretation Simulation

Realizing sophisticated interpretation simulation requires a stack of hardware, software, and data infrastructures:

Hardware

- High‑resolution head‑mounted displays with low latency.

- Motion trackers and eye‑tracking cameras.

- Biometric sensors (heart rate, galvanic skin response).
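“Low latency” can be made concrete as a per-frame time budget. Assuming a 90 Hz display (an assumption; headsets vary), each frame has 1000 / 90 ≈ 11.1 ms, and any interpretation work done on the frame path, such as reading sensors or running inference, has to fit inside that budget alongside rendering. The stage names and costs below are hypothetical.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Time available per displayed frame, in milliseconds."""
    return 1000.0 / refresh_hz

def headroom_ms(refresh_hz: float, stage_costs_ms: dict) -> float:
    """Budget left after the listed per-frame stages; negative means they overrun the frame."""
    return frame_budget_ms(refresh_hz) - sum(stage_costs_ms.values())

# Hypothetical per-frame costs, in milliseconds.
stages = {"tracking": 1.0, "interpretation_model": 2.5, "rendering": 7.0}
print(f"budget {frame_budget_ms(90):.1f} ms, headroom {headroom_ms(90, stages):.1f} ms")
# prints "budget 11.1 ms, headroom 0.6 ms"
```

When headroom is this thin, slower interpretation models are usually better run off the frame path, with their results applied a few frames later.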

Software

- Game engines (Unreal Engine, Unity) extended with procedural generation plugins.

- Machine learning frameworks (TensorFlow, PyTorch) for real‑time inference.

- Cloud services for data aggregation, model hosting, and multiplayer networking.

Data Pipelines

Interpretation simulation thrives on high‑volume, low‑latency data streams. Edge computing helps preprocess sensor inputs, while secure cloud backends support collaborative models and persistent state management.
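One simple form of edge preprocessing is to smooth each sensor stream locally and forward a value only when it changes meaningfully, which cuts upstream bandwidth. This sketch combines a moving average with a deadband; the window size, the 2.0 threshold, and the sample stream are illustrative assumptions.

```python
from collections import deque

def edge_filter(samples, window=4, deadband=2.0):
    """Runs on the edge device: smooth raw samples with a moving average and
    forward a value to the cloud only when it moved by at least `deadband`."""
    recent = deque(maxlen=window)   # sliding window of raw samples
    forwarded = []
    last_sent = None
    for s in samples:
        recent.append(s)
        avg = sum(recent) / len(recent)
        if last_sent is None or abs(avg - last_sent) >= deadband:
            forwarded.append(avg)
            last_sent = avg
    return forwarded

raw = [70, 70, 71, 70, 90, 90, 90, 90]     # hypothetical heart-rate stream
print(edge_filter(raw))  # prints [70.0, 75.25, 80.25, 85.0, 90.0]
```

Eight raw samples become five forwarded values here; on a steady signal the saving is much larger, while genuine changes still reach the cloud promptly.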

Challenges and Ethical Considerations

While the potential of interpretation simulation is immense, several hurdles must be addressed:

- Privacy: Continuous biometric and behavioral data raise concerns about consent, data ownership, and potential misuse.

- Bias in Models: Machine learning systems can reinforce stereotypes if trained on unrepresentative datasets.

- Human‑Computer Interaction Limits: Over‑automation might erode agency, making users feel controlled rather than empowered.

- Technical Bottlenecks: Latency and bandwidth constraints can break immersion, especially in large, persistent worlds.
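For the privacy concern, two common mitigations are pseudonymizing identifiers with a keyed hash and coarsening sensitive signals before they leave the device. The sketch assumes a per-deployment secret key kept out of the analytics pipeline and a 10 bpm bucket size; both are illustrative. Pseudonymized data can still be re-identified when combined with other signals, so this reduces exposure rather than eliminating it.

```python
import hashlib
import hmac

def pseudonymize(user_id: str, secret_key: bytes) -> str:
    """Keyed SHA-256 hash: stable per user, and without the key an attacker
    cannot recompute tokens from guessed IDs."""
    return hmac.new(secret_key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()

def coarsen_bpm(bpm: float, bucket: int = 10) -> int:
    """Report only the lower bound of the bucket, e.g. 87 bpm -> 80."""
    return int(bpm // bucket) * bucket

def privacy_filter(record: dict, secret_key: bytes) -> dict:
    """Drop the raw identifier and exact heart rate before upload."""
    return {
        "user": pseudonymize(record["user_id"], secret_key),
        "hr_bucket": coarsen_bpm(record["heart_rate"]),
    }

key = b"example-key-kept-on-the-server"   # hypothetical; load from a secret store in practice
print(privacy_filter({"user_id": "alice", "heart_rate": 87.4}, key)["hr_bucket"])  # prints 80
```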

Addressing these issues requires multidisciplinary collaboration among technologists, ethicists, policymakers, and end‑users.

Future Horizons of Interpretation Simulation

Looking ahead, several trends point toward more sophisticated and ubiquitous interpretation simulation:

- Neural‑interface devices that decode intention directly from neural signals.

- Edge AI chips that enable low‑latency local inference, reducing dependence on round trips to the cloud.

- Standardized data protocols that allow seamless interoperability across VR, AR, and metaverse platforms.

- Hybrid storytelling frameworks that blend scripted narratives with emergent, data‑driven plotlines.
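A hybrid storytelling framework can be pictured as a fixed spine of authored beats with slots for emergent events. In this sketch the scripted beats always play in order, and between them the highest-priority emergent events (for example, ones scored by an interpretation model) are inserted up to a per-gap limit. The beat and event names, priority scores, and one-event-per-gap policy are assumptions for illustration.

```python
def hybrid_timeline(scripted_beats, emergent_events, max_per_gap=1):
    """Interleave authored beats with data-driven events.

    scripted_beats: story beats that must always occur, in order.
    emergent_events: (priority, name) pairs; higher priority is used first.
    """
    pending = sorted(emergent_events, key=lambda e: e[0], reverse=True)
    timeline = []
    for beat in scripted_beats:
        timeline.append(("scripted", beat))
        # Fill the gap after this beat with the most relevant emergent events.
        for _ in range(max_per_gap):
            if pending:
                timeline.append(("emergent", pending.pop(0)[1]))
    return timeline

story = hybrid_timeline(
    ["arrival", "confrontation", "resolution"],
    [(0.2, "sudden_rain"), (0.9, "rival_appears")],
)
```

Events that do not fit into any gap are simply dropped in this sketch; a fuller framework might defer them to a later session instead.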

As these technologies mature, interpretation simulation is likely to become a foundational layer in many immersive experiences, shaping how we learn, collaborate, and entertain ourselves in the digital age.