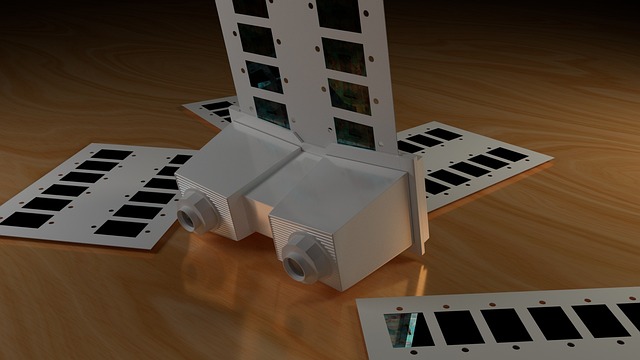

In recent years, the intersection of virtual reality (VR), augmented reality (AR), and the broader metaverse has moved from speculative fiction into everyday reality. At the core of this transition lies a growing emphasis on hardware that delivers more convincing, immersive, and interactive experiences. Among the most promising developments is the resurgence of the stereoscope. The term once described a simple optical device, but it now also covers sophisticated systems that give users a convincing perception of depth and spatial context.

What is a Modern Stereoscope?

Traditionally, a stereoscope presented each eye with one of two slightly offset images, usually through a pair of lenses, so that the brain fused them into a single three‑dimensional scene. In the context of VR and AR, a modern stereoscope refers to any hardware configuration that combines eye tracking, adaptive lenses, and high‑fidelity displays to deliver a separate, correctly offset view to each eye. These systems are engineered to minimize latency, widen the field of view, and adapt to each user's eyes (for example, interpupillary distance and focus), thereby reducing the discomfort commonly associated with head‑mounted displays.
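The core stereoscopic idea, giving each eye a slightly offset view, can be sketched in a few lines. This is a minimal illustration, not any headset's actual pipeline. It assumes a right‑handed, y‑up coordinate frame, a head pose reduced to a position plus a yaw angle, and a default interpupillary distance (IPD) of 63 mm, a commonly cited adult average used here only as a placeholder. The function names `eye_positions` and `vergence_angle_deg` are hypothetical.

```python
import math


def eye_positions(head_pos, yaw_rad, ipd_m=0.063):
    """Return (left_eye, right_eye) world-space positions.

    Assumes a right-handed, y-up frame in which yaw rotates the head about
    the y axis and the head's local +x axis points to the user's right.
    """
    # Rotate the local right vector (1, 0, 0) by yaw about the y axis.
    right = (math.cos(yaw_rad), 0.0, -math.sin(yaw_rad))
    half = ipd_m / 2.0
    left_eye = tuple(p - r * half for p, r in zip(head_pos, right))
    right_eye = tuple(p + r * half for p, r in zip(head_pos, right))
    return left_eye, right_eye


def vergence_angle_deg(depth_m, ipd_m=0.063):
    """Angle (degrees) between the two lines of sight when both eyes
    fixate a point straight ahead at depth_m metres."""
    return math.degrees(2.0 * math.atan(ipd_m / (2.0 * depth_m)))


left, right = eye_positions((0.0, 1.6, 0.0), yaw_rad=0.0)
print(left, right)  # the two eye positions, one IPD apart along x
print(vergence_angle_deg(0.5), vergence_angle_deg(2.0))
```

Rendering the scene once from each returned position yields the stereo pair. The vergence angle shows why nearby objects produce much stronger binocular depth cues than distant ones.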

Key Components of Contemporary Stereoscopic Systems

- High‑Resolution Microdisplays: MicroLED and micro‑OLED panels now reach pixel densities in the thousands of pixels per inch, with some microLED panels exceeding 5,000 ppi. This is enough to render crisp images without the “screen door” effect (visible gaps between pixels) that plagued early headsets.

- Dynamic Eye‑Tracking: By continuously monitoring gaze direction, these systems can apply foveated rendering. The region the user is looking at is rendered at full resolution, while the periphery, where the eye resolves far less detail, is rendered at reduced resolution. This frees GPU time for higher frame rates or richer detail where it matters.

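- Adaptive Optics: Liquid lenses and other varifocal optics adjust focal distance in real time to match where the viewer's eyes are converging. This eases the vergence‑accommodation conflict caused by fixed‑focus lenses, and in some designs it can also compensate for individual refractive errors.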

- Low‑Latency Sensor Fusion: Combining inertial measurement units (IMUs), optical trackers, and event‑based cameras keeps motion‑to‑photon latency low (a commonly cited target is under 20 milliseconds). This is crucial for maintaining immersion and preventing motion sickness.

- Spatial Audio Integration: Binaural audio rendered with head‑related transfer functions (HRTFs) creates a convincing three‑dimensional sound field whose cues match the visual stereoscopic scene.
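Foveated rendering, described in the eye‑tracking item above, can be sketched as a lookup from eccentricity (angular distance from the gaze point) to a resolution scale. This is a simplified illustration under stated assumptions. Screen positions are given as (horizontal, vertical) angles in degrees, and eccentricity is approximated as straight‑line distance in that angle space. The band limits and scale factors in `FOVEATION_BANDS` are hypothetical values chosen for the example, not figures from any particular headset or graphics API.

```python
import math

# Hypothetical eccentricity bands: (max degrees from gaze, resolution scale per axis).
# Values are illustrative only.
FOVEATION_BANDS = [(5.0, 1.0), (15.0, 0.5), (math.inf, 0.25)]


def eccentricity_deg(gaze_deg, tile_deg):
    """Approximate angular distance between the gaze point and a tile centre,
    treating (horizontal, vertical) angles as planar coordinates."""
    return math.hypot(tile_deg[0] - gaze_deg[0], tile_deg[1] - gaze_deg[1])


def resolution_scale(gaze_deg, tile_deg):
    """Per-axis resolution scale for a tile: 1.0 near the fovea, lower outside."""
    ecc = eccentricity_deg(gaze_deg, tile_deg)
    for max_ecc, scale in FOVEATION_BANDS:
        if ecc <= max_ecc:
            return scale
    return FOVEATION_BANDS[-1][1]


def shaded_pixels(gaze_deg, tiles, pixels_per_tile):
    """Pixels shaded per frame; the scale applies to both axes, so area scales by scale**2."""
    return sum(pixels_per_tile * resolution_scale(gaze_deg, t) ** 2 for t in tiles)


# A 10 x 10 grid of 10-degree tiles, centres from -45 to +45 degrees on each axis.
tiles = [(x, y) for x in range(-45, 50, 10) for y in range(-45, 50, 10)]
full = len(tiles) * 1000
foveated = shaded_pixels((0.0, 0.0), tiles, 1000)
print(f"foveated frame shades {foveated / full:.0%} of the full-resolution pixels")
```

Because the periphery dominates the field of view, even a modest drop in peripheral resolution removes most of the shading work. The exact savings depend entirely on the assumed bands.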
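The sensor‑fusion item above can be illustrated with a complementary filter, a classic and deliberately simple technique. It integrates the fast but drifting gyroscope, then pulls the estimate gently toward a slower, drift‑free optical measurement. Production headsets often use more elaborate estimators (for example, Kalman‑filter variants), so treat this as a one‑axis sketch. The blend factor `alpha`, the sample rates, and the gyro bias below are assumed values, and `complementary_filter` and `simulate` are hypothetical names.

```python
def complementary_filter(angle_prev, gyro_rate, dt, optical_angle=None, alpha=0.98):
    """One update step for a single rotation axis (angles in degrees).

    gyro_rate: angular rate from the IMU in degrees per second.
    optical_angle: absolute angle from the optical tracker, or None if no
    optical sample arrived this step.
    alpha: weight on the gyro-integrated estimate (assumed value).
    """
    predicted = angle_prev + gyro_rate * dt  # fast, but accumulates drift
    if optical_angle is None:
        return predicted
    return alpha * predicted + (1.0 - alpha) * optical_angle  # slow drift correction


def simulate(seconds=10.0, imu_hz=1000, optical_every=10, gyro_bias=0.5):
    """Head held still at 0 degrees; the gyro reports only a constant bias.
    Returns (gyro-only angle, fused angle) after `seconds`."""
    dt = 1.0 / imu_hz
    gyro_only = fused = 0.0
    for step in range(int(seconds * imu_hz)):
        measured_rate = gyro_bias  # true rate is zero, so the reading is pure bias
        gyro_only += measured_rate * dt
        # An optical sample arrives once every `optical_every` IMU steps.
        optical = 0.0 if step % optical_every == 0 else None
        fused = complementary_filter(fused, measured_rate, dt, optical)
    return gyro_only, fused


drift, fused = simulate()
print(f"gyro only: {drift:.2f} deg, fused: {fused:.2f} deg")
```

With these assumed numbers, the gyro‑only estimate drifts by about 5 degrees over 10 seconds, while the fused estimate stays within a fraction of a degree of the true orientation.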

Hardware Advancements Driving the Metaverse

While software continues to innovate in terms of content and user interfaces, hardware has become the bottleneck for scaling immersive experiences. Below are the most influential hardware trends shaping the metaverse ecosystem.

1. Light‑Field Displays

Light‑field displays reproduce both the direction and the intensity of light rays, reconstructing a volumetric image that shifts naturally as the viewer changes position. Because the eye can focus at different depths within that image, light‑field displays provide focus cues that conventional stereoscopic displays cannot match, since those show each eye a flat image at a fixed focal distance. They can also reduce the need for eye‑tracked focus adjustment.
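A short calculation shows why focus cues matter. On a conventional stereoscopic headset, the eyes converge at a virtual object's apparent distance but must focus at the fixed distance of the optics. The mismatch, usually expressed in diopters (inverse metres), is known as the vergence‑accommodation conflict. The sketch below assumes a hypothetical fixed focal distance of 2 m. It treats an ideal light‑field display as one that places the focus cue exactly at the object's distance. The function names are illustrative.

```python
def diopters(distance_m):
    """Optical power corresponding to a viewing distance (1 / metres)."""
    return 1.0 / distance_m


def va_conflict(object_distance_m, focal_distance_m):
    """Vergence-accommodation mismatch in diopters: the eyes converge at the
    virtual object's distance but must focus at the display's focal distance."""
    return abs(diopters(object_distance_m) - diopters(focal_distance_m))


FIXED_FOCAL_M = 2.0  # hypothetical fixed focal distance of a conventional headset

for d in (0.3, 0.5, 1.0, 2.0, 10.0):
    fixed = va_conflict(d, FIXED_FOCAL_M)
    # An ideal light-field display places the focus cue at the object itself.
    ideal = va_conflict(d, d)
    print(f"object at {d:>4} m: fixed-focus conflict {fixed:.2f} D, ideal {ideal:.2f} D")
```

Under these assumptions, the conflict grows quickly for nearby objects. An object at 0.5 m produces a 1.5 D mismatch, while one at the focal distance produces none.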

2. Haptic Feedback Ecosystems

Beyond visual and auditory cues, tactile sensations are vital for a fully embodied experience. Wearable haptic suits, smart gloves, and even pressure‑sensitive floor panels now deliver fine‑grained feedback that responds to virtual interactions, thereby reinforcing the stereoscopic perception of the environment.

3. Neural Interface Integration

Early research into brain‑computer interfaces (BCIs) has produced non‑invasive sensors that can detect some motor intentions and eye movements, although their precision remains limited so far. By feeding these signals into the rendering pipeline, future stereoscopes could anticipate user actions and pre‑render frames, potentially reducing perceptible lag.

4. Energy‑Efficient Power Management

Prolonged use demands efficient power consumption. Innovations such as micro‑LEDs, advanced battery chemistries, and adaptive refresh rates help devices run for longer without sacrificing image quality or immersion.

Case Studies: Leading Stereoscopic Hardware Solutions

Several manufacturers are pushing the envelope in creating stereoscopic hardware that meets the demands of VR, AR, and metaverse applications. These case studies illustrate the practical benefits and challenges of each approach.

Case Study A – Ultra‑Wide FOV Headsets

“The new 210‑degree field of view eliminates the feeling of being confined within a box, making the virtual world feel like an actual extension of the real world.” – Product Lead, Visionary XR

This headset uses a dual‑microdisplay setup paired with a lightweight polymer housing. The adaptive optics system allows the user to zoom into objects without distortion, providing a depth experience that is both natural and comfortable over long sessions.

Case Study B – Modular AR Glasses

“By decoupling the optical components from the processing unit, developers can update software without hardware upgrades, extending the product’s lifespan.” – CTO, HoloFlex Inc.

These glasses feature a micro‑projector that overlays virtual content onto the real world. The stereoscopic effect is achieved through a combination of high‑resolution displays and a sophisticated eye‑tracking module that adjusts the image in real time.

Case Study C – Integrated Haptic Suits

“Physical feedback is the missing piece in creating a truly immersive metaverse. Our suite bridges the gap between sight and touch.” – Lead Engineer, Sensory Dynamics

The haptic suit incorporates pressure sensors, vibration motors, and temperature controls that map directly onto the user’s body. Combined with a stereoscopic headset, the system delivers a cohesive, multi‑sensory environment.

Challenges and Solutions in Stereoscopic Hardware Design

Despite remarkable progress, several hurdles remain. Addressing these challenges will be key to mainstream adoption of stereoscopic VR and AR hardware.

1. Weight and Ergonomics

Headsets that provide high‑resolution displays and sophisticated optics often become bulky. Designers are exploring new materials like graphene composites and 3D‑printed structural lattices to reduce mass while maintaining rigidity.

2. Latency Management

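Even small delays between head movement and the display update can break immersion and cause discomfort. Multi‑core processors, dedicated graphics units, and edge computing are being employed to process sensor data and render frames as quickly as possible. Current headsets already predict head pose slightly ahead of time and reproject finished frames just before display. Future systems will likely extend such predictive algorithms to anticipate user actions as well.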
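The predictive algorithms mentioned above can be illustrated by their simplest form, head‑pose prediction with constant angular velocity. Given the latest orientation and the gyro‑measured angular velocity, the orientation is rotated forward by the expected time until the frame reaches the display. This is a minimal sketch under assumptions. Quaternions are stored as (w, x, y, z), angular velocity is expressed in the world frame in radians per second, and the 20 ms prediction horizon in the example is illustrative. Real runtimes pair prediction with late‑stage reprojection and more careful motion models.

```python
import math


def quat_mul(a, b):
    """Hamilton product a * b for quaternions stored as (w, x, y, z)."""
    aw, ax, ay, az = a
    bw, bx, by, bz = b
    return (
        aw * bw - ax * bx - ay * by - az * bz,
        aw * bx + ax * bw + ay * bz - az * by,
        aw * by - ax * bz + ay * bw + az * bx,
        aw * bz + ax * by - ay * bx + az * bw,
    )


def predict_orientation(q, omega, dt):
    """Extrapolate orientation q forward by dt seconds, assuming the world-frame
    angular velocity omega (rad/s) stays constant over that interval."""
    wx, wy, wz = omega
    speed = math.sqrt(wx * wx + wy * wy + wz * wz)
    angle = speed * dt
    if angle < 1e-12:  # negligible rotation; avoid dividing by zero
        return q
    s = math.sin(angle / 2.0) / speed  # turns omega into unit axis * sin(angle / 2)
    dq = (math.cos(angle / 2.0), wx * s, wy * s, wz * s)
    return quat_mul(dq, q)  # world-frame increment applied on the left


identity = (1.0, 0.0, 0.0, 0.0)
yaw_rate = (0.0, math.radians(180.0), 0.0)  # head turning at 180 deg/s about the y axis
predicted = predict_orientation(identity, yaw_rate, dt=0.020)  # 20 ms ahead
print(math.degrees(2.0 * math.atan2(predicted[2], predicted[0])))
```

A head turning at 180 degrees per second rotates about 3.6 degrees in 20 ms. If the motion really is steady, rendering from the predicted pose instead of the last measured one removes most of that error.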

3. Cost Constraints

High‑end components such as microLED panels and adaptive optics remain expensive. Economies of scale, modular design, and open‑source firmware are strategies that can bring down costs over time, making stereoscopic hardware more accessible.

4. Software Compatibility

Developers need standardized APIs to harness the capabilities of new hardware. Khronos Group standards such as OpenXR (for XR devices and runtimes) and Vulkan (for graphics) aim to unify hardware access across devices, but continued collaboration between hardware vendors and software communities remains essential.

The Future Landscape: Stereoscopic Hardware Meets the Metaverse

Looking ahead, the synergy between hardware innovations and the metaverse’s evolving demands will drive the next generation of immersive experiences. Several trends are emerging that promise to reshape the way we interact with virtual worlds.

1. Hybrid Reality Platforms

Future systems will blur the line between VR and AR, offering seamless transitions based on user intent or context. Stereoscopic hardware that can shift between fully immersive, isolated experiences and augmented overlays will become the norm.

2. Personalization at Scale

Adaptive optics and eye‑tracking allow devices to tailor visual fidelity to each user’s specific visual profile. Machine learning algorithms will predict optimal rendering settings, ensuring consistent comfort across diverse populations.

3. Environmental Integration

Smart environments—homes, offices, public spaces—will embed stereoscopic displays and sensors into furniture and walls. Users can interact with virtual objects that appear to exist within the physical space, creating truly mixed realities.

4. Ethical and Social Considerations

With deeper immersion comes greater responsibility. Hardware that accurately replicates the human visual and tactile senses must be designed with safeguards against misuse, ensuring that the metaverse remains a safe, inclusive space.

Conclusion

The evolution of stereoscopic hardware has moved beyond the realm of niche hobbyist gear into mainstream consumer technology. By combining high‑resolution displays, eye tracking, adaptive optics, and haptic feedback, modern stereoscopes deliver depth perception that comes increasingly close to natural vision. As the metaverse expands, these hardware advancements will be essential for creating believable, interactive worlds that people can inhabit, collaborate in, and explore. The journey from simple optical devices to sophisticated, multimodal immersion systems underscores the transformative power of hardware innovation in shaping the future of virtual and augmented experiences.